published by linux.com on June 24, 2008

With optical character recognition (OCR), you can scan the contents of a document into a single file of editable text. This article, which focuses on scanning books, describes the steps you need to take to prepare pages for optimal OCR results, and compares various free OCR tools to determine which is the best at extracting the text.

First, fire up your distribution’s package manager to fetch a few packages and dependencies. In Debian, the required packages are sane, sane-utils, imagemagick, unpaper, tesseract-ocr, and tesseract-ocr-eng. You may also install other language packs for Tesseract — for example, I installed tesseract-ocr-deu for German text.
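Before scanning, it can help to confirm that the commands used in the rest of this article are actually installed. The following is a minimal sketch, not part of the original article: the helper name missing_tools is made up for illustration, and the command-to-package mapping assumes Debian, where scanimage comes from sane-utils and convert from imagemagick.

```shell
#!/bin/sh
# Print the name of every command in the argument list that is not on PATH.
# (missing_tools is a hypothetical helper name, not a standard utility.)
missing_tools() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}

# The commands that the scripts in this article call.
missing=$(missing_tools scanimage unpaper convert tesseract)

if [ -n "$missing" ]; then
    # $missing is left unquoted on purpose so the names print on one line.
    echo "Missing tools:" $missing
    echo "On Debian, try: apt-get install sane-utils imagemagick unpaper tesseract-ocr tesseract-ocr-eng"
else
    echo "All required tools found."
fi
```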

Scanning the pages

Before you can translate images into text, you have to scan the pages. If you want to scan a book, you can’t use an automatic feed for your scanner. The following small bash/ksh script scans pages one at a time and outputs each to a separate file in portable anymap format called scan-n.pnm:

    for i in $(seq --format=%003.f 1 150); do
        echo "Prepare page $i and press Enter"
        read
        scanimage --device 'brother2:bus1;dev1' --format=pnm --mode 'True Gray' \
            --resolution 300 -l 90 -t 0 -x 210 -y 200 \
            --brightness -20 --contrast 15 >scan-$i.pnm
    done

Adjust the parameters of the scanimage command according to your scanner model (find out which device names you can use with scanimage -L and look up device-specific options with scanimage --help --device yourdevice). Also, adjust the geometry parameters: -l and -t set the left and top offsets of the scan area (how much to discard on the left and at the top), and -x and -y set its width and height, usually in millimeters. Try to position the book so that these parameters define a rectangle that contains only the text, not the binding or the border. Don’t worry about the page number; you can cut it out later with little effort.
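scanimage documents -l and -t as the top-left position of the scan area and -x and -y as its width and height. If you measured the text block by its edges instead, the conversion is simple subtraction. Here is a small sketch of that arithmetic; the function name scan_geometry and the sample measurements are hypothetical, and it assumes whole-number values in millimeters.

```shell
#!/bin/sh
# Convert edge positions (in mm) into scanimage geometry options.
# Usage: scan_geometry LEFT TOP RIGHT BOTTOM
# (scan_geometry is an illustrative helper, not part of SANE.)
scan_geometry() {
    left=$1 top=$2 right=$3 bottom=$4
    # width = right edge - left edge, height = bottom edge - top edge
    echo "-l $left -t $top -x $((right - left)) -y $((bottom - top))"
}

# Hypothetical measurements: the text block spans 90 mm to 210 mm across
# and 0 mm to 200 mm down the scanner glass.
scan_geometry 90 0 210 200
# prints: -l 90 -t 0 -x 120 -y 200
```

The printed options can be pasted into the scanimage call in place of the hand-picked geometry values.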

Your scans may not be positioned consistently or have shadows in the corners. If you feed these images into an OCR program, you won’t get accurate results no matter how good the OCR engine might be. However, you can use the unpaper command before applying the OCR magic to preprocess the image and thus get the text recognized more accurately. If you scanned the pages in the right orientation — that is, right side up — you can use the default settings with unpaper; otherwise, you can use some of the utility’s many options. For example, --pre-rotate -90 rotates the image counterclockwise. You can also tell unpaper that two pages are scanned in one image. See the manual page for detailed information. The following unpaper script prepares the scanned images for optimal OCR performance:

    for i in $(seq --format=%003.f 1 150); do
        echo "preparing page $i"
        # unpaper writes to exactly the output name given, so include the .pnm suffix
        unpaper scan-$i.pnm unpapered-$i.pnm
        convert unpapered-$i.pnm prepared-$i.tif && rm unpapered-$i.pnm
    done

You need to convert the scans from .pnm files because the best OCR tool I have found requires the TIFF input format.
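The loops in this article hard-code 150 pages. If your book has a different page count, or you skipped or rescanned some pages, you could derive the page list from the scan files that actually exist. This is only a sketch under the assumption that the files follow the scan-NNN.pnm naming used above; list_pages is a made-up helper name.

```shell
#!/bin/sh
# Print the page number part of every scan-NNN.pnm file in a directory.
# Usage: list_pages [DIRECTORY]   (defaults to the current directory)
list_pages() {
    dir=${1:-.}
    for f in "$dir"/scan-*.pnm; do
        # If nothing matches, the pattern stays literal; skip it.
        [ -e "$f" ] || continue
        n=${f##*/scan-}      # strip the directory and the "scan-" prefix
        echo "${n%.pnm}"     # strip the ".pnm" suffix
    done
}

# Use the result in place of $(seq --format=%003.f 1 150):
for i in $(list_pages .); do
    echo "found page $i"
done
```

Because the page numbers are zero-padded, the shell's alphabetical glob order is also the correct page order.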

Comparing OCR tools

Now comes the most important part: the automated optical character recognition. Many open source tools are available for this job, but I tested a selection and found that most didn’t produce satisfactory results. This is not a representative survey, but it is clear that some open source tools perform far better than others.

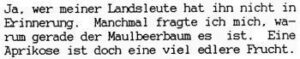

To illustrate, I have prepared a small example from a German book written by my wife’s grandfather. The following figure shows the original text. It’s a smaller version of the original 300 DPI scan that I fed to the OCR programs.

GOCR produced the following results:

Ja, wer _einer __leute hat ihn njcht jn

_3meg. Menc_al fra_e 3ch _jch, wa-

_ gerade der Maulbeerba_ es 3st. Ejne

Aprikose ist doc.h eine vjel edlere m3cht.

Ocrad provided the following:

Ia, Her meiner _leute hat ihn nicht in

_iMe_. Mònchmal fragte ich mich, Na-

nm gerade der Maulbeerbaum es ist. Eine

Rpyik_e ist doch eine viel edlere nvcht.

I used the -l deu option with Tesseract-OCR to select the German word library, which resulted in the following:

Ja. wer meiner Landsleute hat ihn nicht in

Erinnerung. Manchmal fragte ich mich, wa-

rum gerade der Maulbeerbaum es ist. Eine

Aprikose ist doch eine viel edlere Frucht.

Of the three, Tesseract-OCR worked the best, making only one mistake: it interpreted the comma in the first line as a period. Therefore, I made Tesseract-OCR my tool of choice. This simple script uses that application to apply OCR to every scanned page:

    for i in $(seq --format=%003.f 1 150); do
        echo "doing OCR on page $i"
        # -l eng selects English; use -l deu for German text like the example above
        tesseract prepared-$i.tif tesseract-$i -l eng
    done

The result of that process is a bunch of text files that each represent the contents of one page.
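The by-eye comparison of the three tools can be roughly quantified by counting how many words of a hand-typed reference each engine got wrong. The following is a sketch rather than a rigorous accuracy metric: word_errors is a made-up helper, and the reference line is assumed to be the Tesseract output with its one misread comma corrected, as described above.

```shell
#!/bin/sh
# Count the words of a reference text that an OCR result changed or dropped.
# Usage: word_errors REFERENCE_FILE OCR_FILE
word_errors() {
    ref_words=$(mktemp)
    ocr_words=$(mktemp)
    # Put one word per line so that diff compares word by word.
    tr -s '[:space:]' '\n' <"$1" >"$ref_words"
    tr -s '[:space:]' '\n' <"$2" >"$ocr_words"
    # Lines starting with "<" are reference words with no match in the OCR text.
    # "|| true" keeps a count of 0 from being treated as a failure.
    diff "$ref_words" "$ocr_words" | grep -c '^<' || true
    rm -f "$ref_words" "$ocr_words"
}

# First line of the sample text: assumed reference plus two of the results.
work=$(mktemp -d)
printf '%s\n' 'Ja, wer meiner Landsleute hat ihn nicht in' >"$work/reference.txt"
printf '%s\n' 'Ja. wer meiner Landsleute hat ihn nicht in' >"$work/tesseract.txt"
printf '%s\n' 'Ja, wer _einer __leute hat ihn njcht jn'    >"$work/gocr.txt"

echo "tesseract: $(word_errors "$work/reference.txt" "$work/tesseract.txt") word(s) wrong"
echo "gocr:      $(word_errors "$work/reference.txt" "$work/gocr.txt") word(s) wrong"
rm -rf "$work"
```

Typing a reference for one or two pages is usually enough to rank the engines for a particular book and typeface.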

Putting it all together

Before you create a consolidated document, you’ll want to remove any page numbers that still exist in your text files. If they’re located above the text, you can strip the first line from every text file that Tesseract-OCR produced:

    for i in $(seq --format=%003.f 1 150); do
        tail -n +2 tesseract-$i.txt >text-$i.txt
    done

If they are below the text, just use head -n -1 in the above script instead of tail -n +2. This causes the script to remove the last line and not the first.
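Dropping the first line unconditionally also removes real text on pages that carry no page number, such as chapter openings. A more cautious variant removes the first line only when it consists of digits. This is a sketch; strip_page_number is a hypothetical helper name, and it assumes the OCR engine read the page number as plain digits, which will not always be the case.

```shell
#!/bin/sh
# Print a file without its first line, but only if that line is a bare number.
# Usage: strip_page_number FILE
strip_page_number() {
    # On line 1 only: delete the line if it is digits with optional blanks.
    sed '1{/^[[:space:]]*[0-9][0-9]*[[:space:]]*$/d;}' "$1"
}

# Apply it to every OCR result, writing text-NNN.txt next to tesseract-NNN.txt.
for f in tesseract-*.txt; do
    [ -e "$f" ] || continue
    strip_page_number "$f" >"text-${f#tesseract-}"
done
```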

Finally, use cat text-*.txt >complete.txt to create one big file containing your whole book. Edit the resulting file and unhyphenate the whole text by replacing each combined occurrence of a hyphen and a line feed with an empty string. You can also remove unnecessary line feeds. In gedit, you can define your own tools and make them available via a keyboard shortcut. I defined the following tool to work on the current selection:

    #!/bin/sh
    # newlines to spaces, then squeeze each run of blanks into a single space
    tr '\n' ' ' | sed 's/[[:blank:]]\{2,\}/ /g'

With this, you can select some lines and press your defined shortcut. The whole selection becomes one line.
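The unhyphenation step described above can also be scripted instead of done by search and replace in the editor. Here is a minimal sketch using awk; the function name dehyphenate is an assumption for illustration. Note that it joins every line that ends in a hyphen, so a compound word that genuinely carries a hyphen at a line break loses it and needs manual correction.

```shell
#!/bin/sh
# Join words that were hyphenated across a line break.
# Reads text on standard input and writes the result to standard output.
dehyphenate() {
    # If the line ends in "-", drop the hyphen and print without a newline,
    # so the next line continues the word; otherwise print the line as is.
    awk '{ if (sub(/-$/, "")) printf "%s", $0; else print }'
}

# Two lines from the sample text, split at "wa-" / "rum".
printf '%s\n' 'Manchmal fragte ich mich, wa-' 'rum gerade der Maulbeerbaum es ist.' | dehyphenate
# prints: Manchmal fragte ich mich, warum gerade der Maulbeerbaum es ist.
```

To process the whole book, run it as dehyphenate <complete.txt >complete-joined.txt, where complete-joined.txt is any output name you choose.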

You now have one large document that represents the contents of the book. Consider reading the whole file again to catch any typos that remain, and then moving on to LaTeX to create a professional-looking Portable Document Format (PDF) file from your scanned text.